Sharing a lightweight framework built on asyncio and the aio family of libraries that implements the Scrapy workflow and conventions | Python project showcase

Project overview (taken from):

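- The framework is based on the open-source frameworks Scrapy and scrapy_redis. It follows their standard workflow to provide a general-purpose solution, is more lightweight than Scrapy, and offers richer customization for different use cases.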

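- It implements dynamic variable injection and asynchronous coroutines. The individual nodes are decoupled and can be used separately, Scrapy's built-in extension features are simplified, and the core basic usage is retained.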

- Priority queues for distributed crawling are built in, including redis, rabbitmq, and others.
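
As a rough illustration of the last two points (coroutine-based crawling driven by a priority queue), here is a minimal sketch that uses only the Python standard library. It is not aioscpy's implementation: `FAKE_SITE`, `fetch`, `worker`, and `crawl` are hypothetical names introduced for this example, and `asyncio.PriorityQueue` stands in for the redis or rabbitmq queue mentioned above.

```python
import asyncio
import itertools

# Hypothetical in-memory "site": URL -> (items on the page, links to follow).
FAKE_SITE = {
    "/page/1": (["quote-a", "quote-b"], ["/page/2"]),
    "/page/2": (["quote-c"], ["/page/3"]),
    "/page/3": (["quote-d"], []),
}


async def fetch(url):
    """Stand-in for an HTTP request; a real crawler would use an async client."""
    await asyncio.sleep(0)  # yield control, as real network I/O would
    return FAKE_SITE[url]


async def worker(queue, seen, items, counter):
    """Take the request with the smallest priority number, parse it, enqueue follow-ups."""
    while True:
        priority, _, url = await queue.get()
        try:
            page_items, links = await fetch(url)
            items.extend(page_items)
            for link in links:
                if link not in seen:
                    seen.add(link)
                    # Deeper pages get a larger number, so they are served later.
                    await queue.put((priority + 1, next(counter), link))
        finally:
            queue.task_done()


async def crawl(start_urls, concurrency=2):
    queue = asyncio.PriorityQueue()
    counter = itertools.count()  # tie-breaker so equal priorities stay FIFO
    seen = set(start_urls)
    items = []
    for url in start_urls:
        await queue.put((0, next(counter), url))

    workers = [
        asyncio.create_task(worker(queue, seen, items, counter))
        for _ in range(concurrency)
    ]
    await queue.join()  # returns once every queued request has been processed
    for task in workers:
        task.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return items


if __name__ == "__main__":
    print(asyncio.run(crawl(["/page/1"])))
```

Swapping the in-process queue for an external one is what would let several processes share the same pending requests; the worker loop itself would stay largely the same under that assumption.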

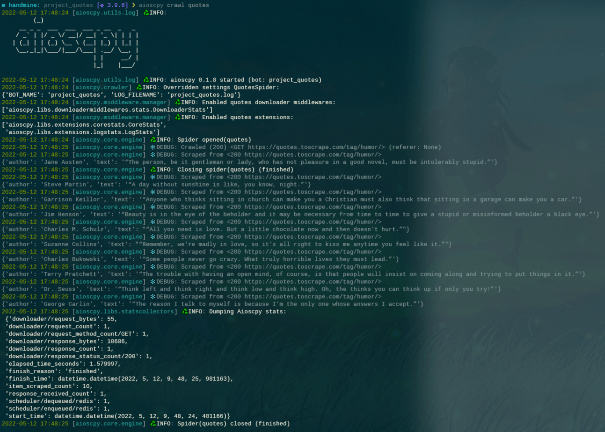

Screenshot of a run:

Single-script spider:

    from pprint import pformat

    from aioscpy.spider import Spider
    from anti_header import Header


    class SingleQuotesSpider(Spider):
        name = 'single_quotes'
        custom_settings = {
            "SPIDER_IDLE": False
        }
        start_urls = [
            'https://quotes.toscrape.com/',
        ]

        async def process_request(self, request):
            # Attach randomized browser-like headers before the request is sent.
            request.headers = Header(url=request.url, platform='windows', connection=True).random
            return request

        async def process_response(self, request, response):
            # Hand the request back on 404/503 instead of passing the response on.
            if response.status in [404, 503]:
                return request
            return response

        async def process_exception(self, request, exc):
            raise exc

        async def parse(self, response):
            # Yield one item per quote block on the page.
            for quote in response.css('div.quote'):
                yield {
                    'author': quote.xpath('span/small/text()').get(),
                    'text': quote.css('span.text::text').get(),
                }

            # Follow the pagination link, if there is one.
            next_page = response.css('li.next a::attr("href")').get()
            if next_page is not None:
                yield response.follow(next_page, callback=self.parse)

        async def process_item(self, item):
            self.logger.info("{item}", **{'item': pformat(item)})


    if __name__ == '__main__':
        quotes = SingleQuotesSpider()
        quotes.start()

GitHub repository: